Give AI tools wings with good prompts

Writing effective prompts for AI tools such as Bing Chat and ChatGPT is a new skill that requires both creativity and technical understanding. A well-worded prompt can lead the AI to deliver a clear, concise and relevant response, while a poorly worded prompt can result in a confusing or incomplete one. This skill is also known as Prompt Engineering. Below is an explanation of how to write good prompts, with clear examples and comparisons between effective and ineffective prompts.

What is Prompt Engineering?

Prompt Engineering is a discipline within artificial intelligence that focuses on optimizing human-machine interaction through language.

For example, a prompt such as “Give an overview of the French Revolution” may lead to a general historical overview, while a more focused prompt such as “Analyze the impact of the French Revolution on contemporary European politics” will prompt the AI to do a more in-depth and specific analysis.

Prompt Engineering is therefore not just about asking the right questions, but also about understanding the underlying mechanisms of AI models to make the interaction as productive as possible.

Tips for writing good prompts:

For each tip, we compare the results from two tools: the free version of ChatGPT, which uses GPT-3.5, and the free version of Copilot for Microsoft Bing, which uses GPT-4. Copilot also lets you choose between three conversation styles. I chose “more creative” each time, since that is the style I use by default.

Be specific and clear

A good prompt gives the AI clear instructions about what is expected. This means being specific about the topic and context of your query. For example:

- Good prompt: “Can you provide a list of all Nobel Peace Prize winners from 2000 to the present, with a brief description of their contributions?”

- Bad prompt: “list of Nobel laureates”

In the first example, the AI knows exactly what is being asked: a list of Nobel laureates for a specific category and time period, including descriptions. In the second example, the question is too vague. There are multiple Nobel Prize categories, and without additional information the AI cannot determine which information is relevant.
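The difference between the two prompts becomes concrete when you treat a prompt as a set of explicit parts: the task, the topic, the time period and the level of detail you want. Below is a minimal Python sketch of that idea. The function `build_prompt` and its parameter names are hypothetical and only serve as an illustration; they are not part of ChatGPT, Copilot or any other tool.

```python
def build_prompt(task, topic, period=None, details=None):
    """Assemble a question from explicit parts (hypothetical helper)."""
    parts = [f"{task} {topic}"]
    if period:
        parts.append(period)
    prompt = " ".join(parts)
    if details:
        prompt += f", with {details}"
    return prompt + "?"


# The vague prompt leaves out the category, the period and the detail level.
vague = "list of Nobel laureates"

# The specific prompt names every part explicitly.
specific = build_prompt(
    task="Can you provide a list of",
    topic="all Nobel Peace Prize winners",
    period="from 2000 to the present",
    details="a brief description of their contributions",
)
print(specific)
```

If you cannot fill in one of the parts, that is usually a sign that the prompt is still too vague.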

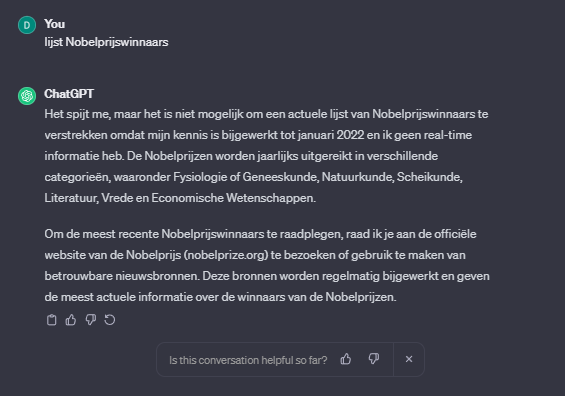

Result ChatGPT (GPT3.5):

Good prompt:

The list stops as early as 2009. This is strange, since ChatGPT has knowledge up to January 2022, which it also states itself at the end of the result.

Bad prompt:

Because of the vague prompt, ChatGPT doesn’t know where to start or which category is meant. You don’t even get a list.

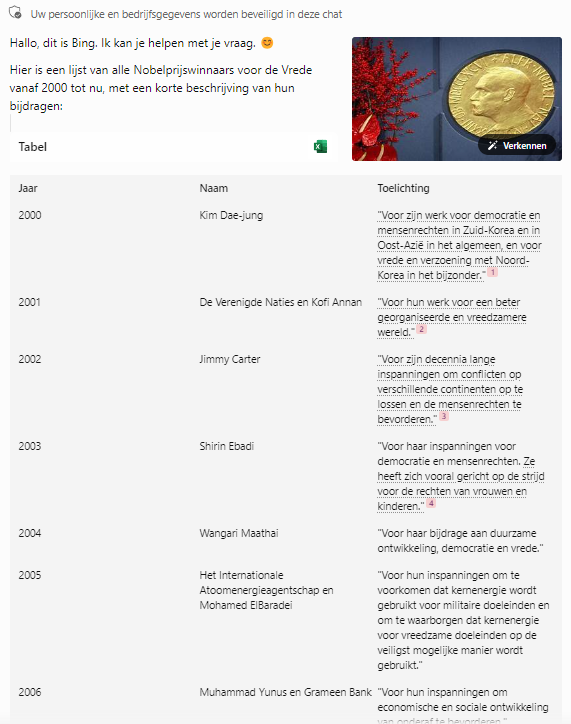

Result Copilot (4.0):

Good prompt:

With Copilot, the list went up to 2021. Not only was the result more accurate, it was also presented in a table that I could immediately edit in Excel (see the Excel logo).

Bad prompt:

With the bad prompt, it’s abundantly clear that Copilot doesn’t know where to start. It does offer suggestions, so it tries to steer you toward writing better and clearer prompts.

Avoid ambiguity

Make sure your prompt is not open to multiple interpretations. This can lead to responses that do not meet your expectations.

- Good prompt: “What are the steps to submit a U.S. visa application for a tourist visa?”

- Bad prompt: “Apply for Visa America?”

The first example is clear and specific, while the second example is too broad and provides no context.
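One way to catch ambiguity before you send a prompt is to run it past a short checklist of the details the answer depends on. The Python sketch below is a rough heuristic, not a feature of ChatGPT or Copilot. The function name `missing_context` and the keyword lists are assumptions chosen for the visa example, and the check is a simple substring match.

```python
def missing_context(prompt, required):
    """Return the labels of required details that the prompt does not mention.

    `required` maps a label to a list of keywords; a detail counts as
    present when at least one of its keywords occurs in the prompt.
    """
    text = prompt.lower()
    return [
        label
        for label, keywords in required.items()
        if not any(keyword in text for keyword in keywords)
    ]


# Hypothetical checklist for the visa question above.
visa_checklist = {
    "visa type": ["tourist", "student", "work"],
    "what you want to know": ["steps", "how", "cost", "documents"],
}

bad = "Apply for Visa America?"
good = "What are the steps to submit a U.S. visa application for a tourist visa?"

print(missing_context(bad, visa_checklist))   # both details are missing
print(missing_context(good, visa_checklist))  # nothing is missing
```

Every label the function returns is a point where the AI would have to guess what you mean.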

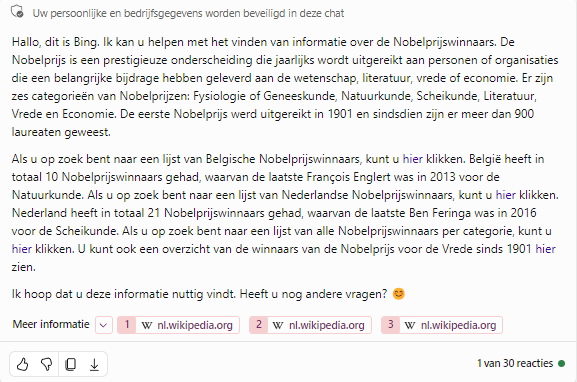

Result ChatGPT (GPT3.5):

Good prompt:

You will immediately get a roadmap that will help you apply for a tourist visa.

Bad prompt:

You still get a helpful roadmap, but because the prompt did not clearly state that it was about a tourist visa, you may get incorrect dates.

Result Copilot (4.0):

Good prompt:

The roadmap is explained in detail. It clearly states what to do and what to expect. Because Copilot always lists links and resources, it’s also easier to get to the right web pages quickly.

Bad prompt:

No roadmap this time, but it is interesting that Copilot knows right away that you are a Belgian user. This is useful in this example because applying for a visa involves a different procedure depending on your country.

Be concise

An effective prompt is direct and goes straight to the point without unnecessary details. This helps the AI quickly grasp the gist of the question and provide an appropriate response.

- Good prompt: “Can you summarize the plot of ‘Harry Potter and the Philosopher’s Stone’ by J.K. Rowling?”

- Bad prompt: “give a summary of Harry Potter”

The good example asks the AI to perform a specific task: provide a plot summary of a well-known book. The bad example has the AI guessing exactly what the user wants to know. After all, there are several Harry Potter books/movies.

By being concise in your prompts, you avoid unnecessary complexity and make it easier for the AI to understand what you are asking. This results in more efficient and effective communication, getting you the information you need faster.

Result ChatGPT (GPT3.5):

Good prompt:

A general summary of Harry Potter and the Philosopher’s Stone. If you would like more details, you should make that clear in the prompt.

Bad prompt:

Actually a very good result in spite of the vague prompt. Of course, you don’t get the amount of information you expect with such a prompt. That’s why you have to say concisely what you want as a result.

Result Copilot (4.0):

Good prompt:

Like ChatGPT, Copilot also gives you a clear summary.

Bad prompt:

Again, you get a general answer, but that is understandable since the prompt did not clearly state which book you want a summary of.

Expected outcomes

With the good prompts, the AI will give a detailed, relevant answer that directly addresses your question. With the bad prompts, the AI will probably ask for clarification or give a general, less useful answer.

As you have read, AI tools such as Copilot and ChatGPT can be very useful for various tasks, for example summaries, procedures and general research. In no time you get valuable and relevant data, which speeds up your work considerably. Do you use Copilot? Then you also get up-to-date information every time, since this tool draws on what can be found on the web.

Practice makes perfect, so keep experimenting with different wordings to see what works best for your needs.
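If you want to experiment more systematically, you can put several wordings of the same question side by side. The Python sketch below shows one possible setup. The `ask` argument is a placeholder for whichever AI tool you use; `fake_ask` is a stand-in so the example runs on its own and is not a real API.

```python
def compare_wordings(ask, prompts):
    """Send each prompt variant to `ask` and collect the answers.

    `ask` is any function that takes a prompt string and returns the
    answer as a string. Each result is (prompt, answer, word count).
    """
    results = []
    for prompt in prompts:
        answer = ask(prompt)
        results.append((prompt, answer, len(answer.split())))
    return results


def fake_ask(prompt):
    # Stand-in for a real AI tool; it only echoes the prompt.
    return f"(answer to: {prompt})"


variants = [
    "give a summary of Harry Potter",
    "Can you summarize the plot of 'Harry Potter and the Philosopher's Stone' by J.K. Rowling?",
]

for prompt, answer, words in compare_wordings(fake_ask, variants):
    print(f"{words:>3} words | {prompt}")
```

The word count is only a crude first signal; reading the answers next to each other tells you which wording works best for your needs.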